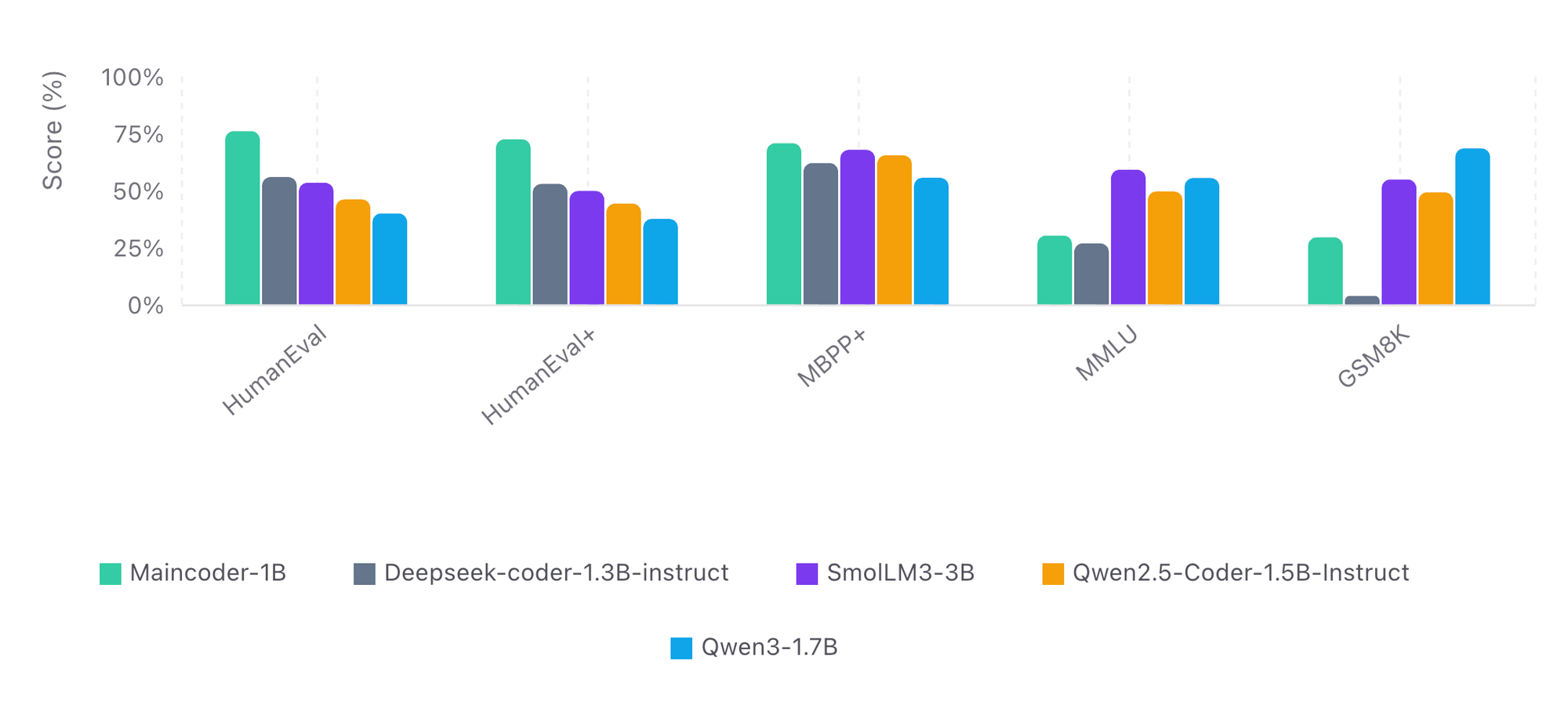

We release Maincoder-1B, a 1B-parameter transformer-based code generation model that achieves state-of-the-art performance among models of comparable size on standard coding benchmarks. It scores 76% on HumanEval, the best result among comparably sized open-source models.

This result shows that strong coding capability does not require large model scale. It can instead be achieved through improved data processing at the pre-, mid-, and post-training stages, together with innovations in reinforcement-learning-based post-training. Maincoder-1B is designed for practical deployment in latency- and cost-sensitive settings, including interactive coding assistance, local and on-device inference, large-scale batch code transformation, and systems that require many fast model rollouts, such as search or verification-based program synthesis. We make the model weights publicly available and report comprehensive benchmark results.

Small, high-quality coding models play a critical role in modern ML systems and developer workflows. They enable low-latency and low-cost inference, making them well suited for interactive coding assistance, large-scale batch processing, and deployment in cost-sensitive environments. Their efficiency allows them to be used as always-on components rather than occasional fallback tools.

Performance Metrics

Maincoder-1B achieves state-of-the-art results among similarly sized models across a range of widely used coding benchmarks. We evaluate on HumanEval, HumanEval+, and MBPP+. Notably, the model achieves best-in-class performance on HumanEval, which measures a model's ability to generate functionally correct Python code for short, well-specified programming tasks of moderate difficulty, with correctness verified by unit tests. This is particularly meaningful for small coding models, as it reflects strong core code synthesis and correctness despite limited capacity. The model is not intended for security-critical or safety-critical code without human oversight.
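To make the idea of unit-test-verified correctness concrete, here is a minimal sketch of how a HumanEval-style check could work. It is not the evaluation harness used for Maincoder-1B. The task, the two completions, and the helper names `passes_tests` and `pass_at_k` are hypothetical, and we assume the commonly used unbiased pass@k estimator; the release does not state which metric underlies the reported score.

```python
from math import comb


def passes_tests(candidate_src: str, test_src: str) -> bool:
    """Return True if the candidate code runs and every assertion in test_src holds.

    Illustration only: exec() on untrusted model output is unsafe. A real harness
    would run each sample in a sandboxed subprocess with a timeout.
    """
    namespace: dict = {}
    try:
        exec(candidate_src, namespace)  # define the generated function
        exec(test_src, namespace)       # run the unit tests against it
    except Exception:
        return False
    return True


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n samples for one task, of which c passed the tests."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical task with two hypothetical model completions.
tests = "assert add(2, 3) == 5\nassert add(-1, 1) == 0"
good = "def add(a, b):\n    return a + b"
bad = "def add(a, b):\n    return a - b"

results = [passes_tests(src, tests) for src in (good, bad)]
print(results)
print(pass_at_k(n=len(results), c=sum(results), k=1))
```

A benchmark score is then the average of this per-task estimate over all tasks in the suite.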

Quantisation and deployment readiness are critical considerations for small coding models intended for real-world use. While this release focuses on full-precision evaluation, Maincoder-1B is designed with deployment in mind and is expected to be compatible with standard post-training quantisation approaches commonly used for small transformer models.
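As a rough illustration of what post-training quantisation involves, the sketch below applies symmetric per-tensor int8 quantisation to a handful of made-up weights and estimates the weight memory of a model with a nominal 1 billion parameters at several bit widths. This is a generic textbook scheme, not a method confirmed for Maincoder-1B. The function names are hypothetical, and the memory figures cover weights only (no activations or KV cache).

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantisation: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


def weight_memory_gb(n_params: int, bits: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits / 8 / 1e9


weights = [0.5, -1.27, 0.0, 0.31]  # made-up values for illustration
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_err)

# Assumes exactly 1e9 parameters; the real count may differ slightly.
for bits in (32, 16, 8, 4):
    print(f"{bits}-bit: {weight_memory_gb(1_000_000_000, bits)} GB")
```

The rounding error per weight is bounded by half the scale, which is why models whose weights have a narrow range tend to quantise with little accuracy loss. Whether that holds for this model would need to be measured.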

Maincoder-1B is released under the Apache License 2.0. Read the release note in full at: https://maincode.com/maincoder/

The model and documentation are available on Hugging Face: https://huggingface.co/Maincode/Maincoder-1B