At SXSW Sydney, our Designing Matilda event brought together designers, engineers, and researchers who believe AI can be more than efficient. It can be expressive, collaborative, and deeply human.

Hosted by Dr. Amanda Baughan and Kees Bakker, the session explored how Maincode approaches AI UX design: not as a science of control, but as a practice of relationship-building. Matilda, Australia’s first large language model, was our canvas for experimentation.

We shared the six principles that guide every Maincode build from discovery to delivery. Each principle is a stance: that tools shape judgment, that system behavior speaks louder than polish, and that design encodes intelligence.

Move humans forward

AI innovation without the user in mind leads to breakthroughs in a vacuum. This principle matters deeply to us because it sits at the core of what we believe: progress should serve people. We’re not interested in building technology for its own sake. We measure our work by human impact: by how well it removes friction, unlocks new capability, and creates moments of joy, momentum, or connection in people’s lives.

That belief shaped our decision to explore the AFL as a proving ground. Great products don’t always start by solving a problem; sometimes they begin with curiosity. And few things connect Australians like the AFL. It’s more than a sport — it’s culture, ritual, and identity. For us, it was the perfect space to explore how AI could enhance human experience rather than replace it.

We started with a simple question: what would an intelligent AFL experience look like if it truly reflected the energy of the fans? Through exploratory interviews, one insight stood out: people weren’t just looking for information. They wanted immersion, connection, and new ways to experience what they love. That insight defined the opportunity: an AI designed to deepen engagement and bring fans closer to the game, not further away.

Do the hard thing

Two months. A hard deadline. Game on. We shipped — not just an LLM built from scratch, but entirely new modes of interaction alongside it.

The next principle we live by is simple to say and hard to execute: Do the hard thing.

At Maincode, we choose the path that’s right, not just the one that’s fast. Doing the hard thing isn’t a slogan for us; it’s a standard. It means making timeless choices: turning down shortcuts, easy wins, and copy-paste solutions that might work today but won’t hold up tomorrow.

That mindset shaped how we approached AI interaction design. Instead of treating AI as a single surface, we broke it apart, exploring different interaction types, responses, and contexts. We tested close to 20 widget types, then deliberately narrowed the set shown for each query to just 4–6. The goal wasn’t volume; it was clarity. We wanted every interaction to tell a meaningful story about the AFL, whether a user was exploring players, teams, rivalries, or history.

It took longer. It was harder. But the result was an interaction system that felt intentional, stable, and human-centered. That’s what doing the hard thing looks like in practice.
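To make the narrowing step concrete, here is a minimal sketch of how a large widget catalog could be cut down to a handful per query. It is an illustration under stated assumptions, not how Matilda does it: the `Widget` type, the topic tags, and the `select_widgets` function are all hypothetical, and the overlap-count scoring is just one simple way to rank relevance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Widget:
    """Hypothetical widget descriptor: a name plus the topics it can illustrate."""
    name: str
    topics: frozenset


def select_widgets(catalog, query_topics, max_count=6):
    """Return at most max_count widgets, most relevant first.

    Relevance here is simply the number of topics a widget shares with the
    query (an assumption for illustration). Widgets with no overlap are dropped.
    """
    scored = [(len(w.topics & query_topics), w) for w in catalog]
    relevant = [(score, w) for score, w in scored if score > 0]
    # Highest overlap first; break ties by name so the result is stable.
    relevant.sort(key=lambda pair: (-pair[0], pair[1].name))
    return [w for _, w in relevant[:max_count]]
```

The cap is the point of the design: a query about a player surfaces a small, ordered set of widgets rather than everything the catalog could render. This sketch does not enforce a lower bound, so a narrow query may return fewer than four.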

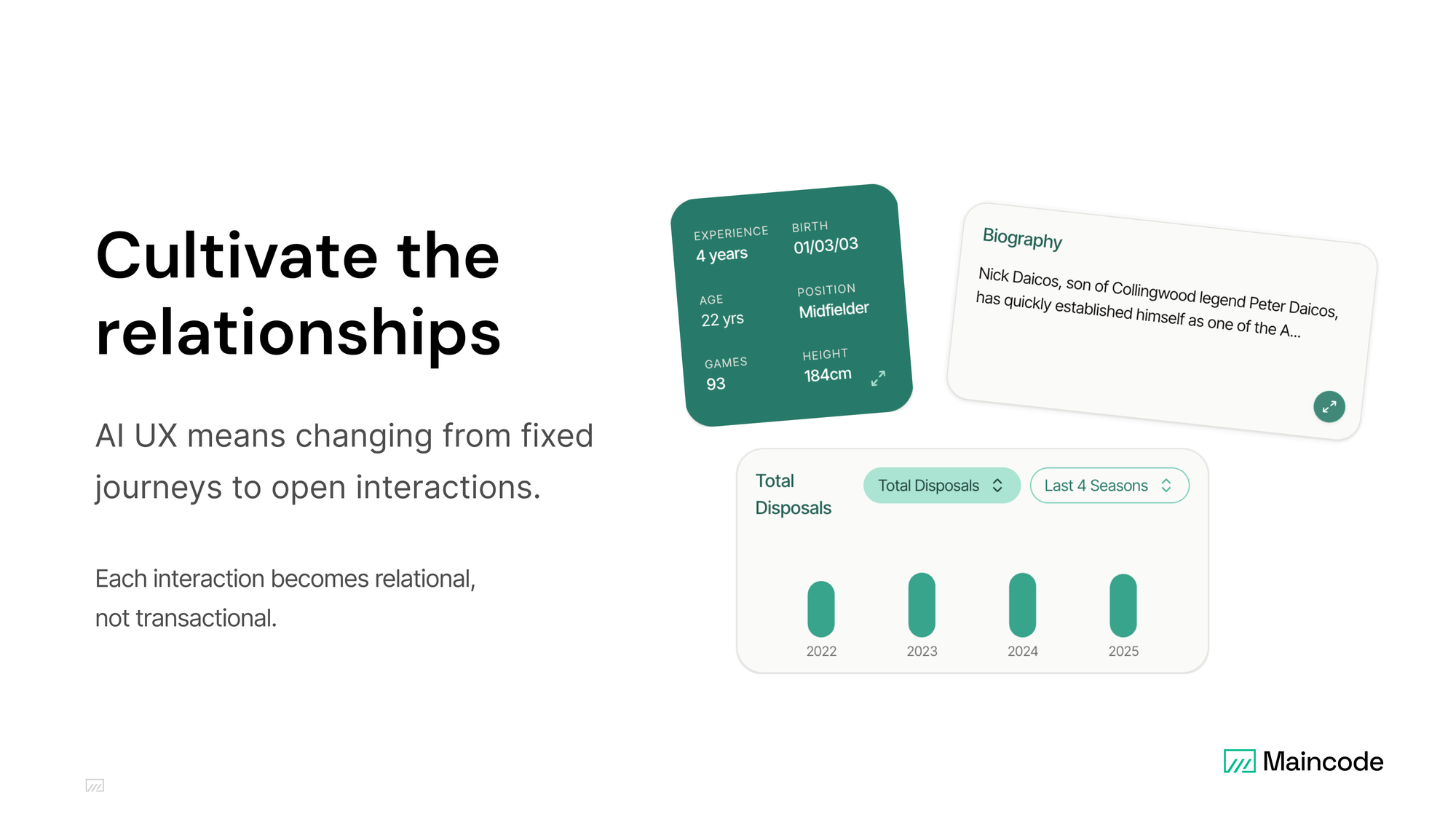

Cultivate relationships with users

With AI, every interaction becomes an input into a larger story. Each response shapes how users perceive intelligence, reliability, and care. The role of UX is to guide that story — setting expectations, signaling progress, listening closely, and failing with grace. When the system gets it wrong, the experience still has to feel right. Each exchange is a moment in an evolving relationship.

That principle shaped how we built Matilda. Established AI products have already defined baseline expectations in terms of speed, accuracy, even personality. For a demo built in two months, the challenge wasn’t to outperform them. It was to earn trust differently.

We focused on the relationship between the user and the AI, not the illusion of perfection. Probabilistic models will miss sometimes, but what matters is what happens next. So we designed moments that build belief: a clear purpose, a consistent voice, and interactions that land with confidence.

We anchored the experience around journeys that always feel strong, like the player overview and comparison flows, where the AI reliably contextualizes stats, surfaces insight, and keeps things human. Around those anchors, we used prompt suggestions to gently guide exploration, keeping the story coherent, even as the model and user explored freely.

The result wasn’t just a good demo. It was a system users could believe in.

Even when users don’t get exactly what they want, they should still want to come back. That’s the real test of trust: not flawless output, but a relationship that holds through imperfection.

Brand is your signature

Brand is your signature, shaped by creative and strategic judgment over time. AI expands what’s possible, but brand doesn’t come from capability alone. It comes from choice, instinct, and the decisions you make when there is no obvious right answer.

The work starts with context over prompts. AI can spot patterns, but meaning, values, and nuance still come from designers. What users feel isn’t driven by visual polish alone, but by system behavior: how something loads, responds, and even fails under pressure. Simplicity becomes critical as complexity multiplies, especially when data is messy or models misfire. And through all of it, judgment remains non-automatable. AI can replicate style, but it can’t explain why something works or decide what should endure. AI may be infrastructure, but brand lives in choice. Build with tools. Lead with judgment.

Engineer with context

Context engineering is the practice of designing everything an LLM sees before it acts. It’s not just prompt writing; it’s framing the problem, setting constraints, shaping memory, and deciding what information matters. The setup defines the response. Quality in leads to clarity out. When models fail, it’s often not because of what they generated, but because of what they were shown. Precision matters more than verbosity. More words don’t create more meaning, but clearer ones do.

To engineer with context is to think in systems, not instructions. Every prior message, retrieved document, filter, and constraint becomes part of the model’s understanding before it speaks. You’re not just telling the model what to do; you’re shaping how it thinks. Designing what the model knows, remembers, and carries forward is invisible work, but it’s where intention turns into outcome. When context is crafted well, the model’s output starts to feel deliberate, coherent, and unmistakably yours.
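As a rough illustration of thinking in systems rather than instructions, the sketch below assembles everything a model would see into one explicit, inspectable context. The `ContextBundle` type, its field names, the section headings, and the trimming limits are all assumptions made for this example, not a description of Maincode’s pipeline or of any particular LLM API.

```python
from dataclasses import dataclass


@dataclass
class ContextBundle:
    """Hypothetical container for what the model is shown before it answers."""
    constraints: list       # rules the answer must respect
    retrieved_docs: list    # reference snippets, assumed most relevant first
    history: list           # (role, text) pairs, oldest first
    max_docs: int = 3       # precision over verbosity: cap reference material
    max_turns: int = 4      # carry forward only the most recent turns


def build_context(bundle, question):
    """Assemble constraints, references, memory, and the question into one string."""
    docs = bundle.retrieved_docs[: bundle.max_docs]
    turns = bundle.history[-bundle.max_turns:]
    sections = [
        "## Constraints\n" + "\n".join(f"- {c}" for c in bundle.constraints),
        "## Reference material\n" + "\n".join(f"- {d}" for d in docs),
        "## Recent conversation\n" + "\n".join(f"{role}: {text}" for role, text in turns),
        "## Question\n" + question,
    ]
    return "\n\n".join(sections)
```

Keeping the assembly in one function makes the invisible work visible: when an answer goes wrong, the first thing to inspect is the string this function returned, not the model.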

Remain tool aware

Tool awareness is less about chasing the latest features and more about understanding the full workflow you’re designing within. Every tool, pipeline, and handoff shapes how you think and what you produce. Tools don’t replace judgment; they channel it. The real advantage comes from making those choices consciously, choosing workflows with intent rather than stacking systems by default, and ensuring the process supports the vision instead of obscuring it.

That means making the chain visible rather than mysterious, so teams can see where transformations happen and where human judgment enters. It means optimizing for clarity over endless flexibility, building systems with a strong core and clear edges. And above all, it means letting judgment lead. Your taste, your constraints, and your decisions should be embedded into the workflow itself. In a world of infinite tools, ownership lives in how deliberately you design the process that uses them.

From Principles to Practice

Matilda embodies these principles in action. She’s not built to wow with features; she’s built to explore what feels right when AI meets user experience.

As Amanda noted, “Established AI products have shaped what people expect from AI, but the future of AI interaction is still ours to design.”

If you’re interested in designing the future of AI experiences, Maincode is hiring. Shape how people experience AI through thoughtful design and sharp engineering.